Is Video Analytics Ready for the Mainstream?

When video analytics started making an appearance in the market, promises of

extraordinary capabilities raised industry professionals' expectations, but the

early products simply did not deliver. As with any new platform, there were

problems, and early adopters still tell war stories about the failure of

first-generation systems, even as underlying technologies have enhanced the

capabilities of later-generation products. I think we all agree that marketing

hype often outpaces real-world success. But fortunately in this case, today's

analytics solutions are delivering proven performance at affordable prices.

In its short history, video analytics has progressed from

use in limited, niche applications to its current potential as a mainstream tool

within the reach of any video end user. Video analytics capabilities have

evolved from being almost exclusively a software add-on to large Video

Management Software driven systems to being embedded in products such as cameras

and recording devices. This shift in the deployment of video analytics is

changing the landscape for systems design.

Smarter cameras at the edge

Video motion detection was a forerunner of video content analysis, or video

analytics, and has been around for decades. Video cameras today can detect

motion easily and dependably, and, in fact, this feature is usually taken for

granted and not considered real video analytics. But video motion detection

performs the same primary function as more advanced video analytics, which is to

analyze the content of a video image and provide an alarm. The only difference

is that the video analytics inside smarter video cameras can effectively analyze

a more diverse range of data.
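As a rough illustration of what "analyze the content of a video image and provide an alarm" means in its simplest form, the sketch below compares two grayscale frames pixel by pixel and raises an alarm when enough of the image has changed. It is a minimal example rather than any vendor's implementation; the function names and both thresholds (`pixel_threshold`, `area_threshold`) are assumptions chosen for illustration.

```python
from typing import List

Frame = List[List[int]]  # grayscale image as rows of 0-255 pixel values


def changed_fraction(prev: Frame, curr: Frame, pixel_threshold: int = 25) -> float:
    """Return the fraction of pixels whose brightness changed by more than pixel_threshold."""
    total = 0
    changed = 0
    for prev_row, curr_row in zip(prev, curr):
        for p, c in zip(prev_row, curr_row):
            total += 1
            if abs(c - p) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def motion_alarm(prev: Frame, curr: Frame,
                 pixel_threshold: int = 25, area_threshold: float = 0.02) -> bool:
    """Alarm when at least area_threshold of the image changed between two frames."""
    return changed_fraction(prev, curr, pixel_threshold) >= area_threshold


# Usage: a 4x4 dark scene, then the same scene with a bright 2x2 object in it.
empty = [[10] * 4 for _ in range(4)]
with_object = [row[:] for row in empty]
for y in (1, 2):
    for x in (1, 2):
        with_object[y][x] = 200

print(motion_alarm(empty, empty))        # False
print(motion_alarm(empty, with_object))  # True
```

More advanced analytics follow the same pattern of "analyze, then decide whether to alarm"; they differ in how much they extract from the image before making that decision.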

The processing power being built into new network cameras makes more advanced

analytics possible, and also plays a key role in the improvement of overall

image performance. For example, in-camera processing is used to manage the

dynamic range of images by correcting the balance of light and dark areas within

a scene, and can also stabilize images to offset wind vibration, among other

useful features. Smart cameras can also create privacy zones within images and

block viewing of specific areas in a scene such as, say, the windows of

an apartment complex.

Relative to video analytics, advanced in-camera processing provides these

capabilities:

Object left behind. The camera can alarm if an object appears and remains in

a scene for longer than a pre-selected period of time.

Object moved. An object in a scene can be identified and specified, and the

camera will alarm if the object is moved.

Virtual triplines. A directional line is specified and an alarm triggers in

the event something crosses the line. Alternatively, an area could be specified

and the alarm would indicate something has entered the area.

Object tracking. As an object or person moves through a scene, an alarm can

indicate if the direction has changed.

People tracking. By identifying people in a scene, video analytics can help

prevent loitering or tailgating (a person follows someone else through a gate or

door without presenting a credential).

People counting. The camera can identify the number of individuals or other

objects such as cars in a scene and count them automatically.

Scene change detection. In the event a camera is tampered with or its field

of view obstructed, intelligent cameras can sense the change and generate an

alarm.

Identifying faces. The camera identifies a face and isolates it from the rest

of the video frame. These captured faces could be compared to face images stored

in a centralized database or even on a digital video recorder to provide face

recognition.
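To make one of the capabilities listed above concrete, here is a minimal sketch of a directional virtual tripline. It assumes the camera already tracks an object and reports its position in two consecutive frames; the tripline is a line segment from `a` to `b`, and a crossing is reported only when the object's path intersects that segment. The function names and the "forward"/"reverse" labels are hypothetical, and real products will differ in how they track objects and filter noise.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]


def side_of_line(a: Point, b: Point, p: Point) -> float:
    """Signed cross product: positive on one side of the directed line a->b, negative on the other."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def segments_intersect(a: Point, b: Point, p: Point, q: Point) -> bool:
    """True if the path p->q strictly crosses the tripline segment a->b."""
    d1 = side_of_line(a, b, p)
    d2 = side_of_line(a, b, q)
    d3 = side_of_line(p, q, a)
    d4 = side_of_line(p, q, b)
    return d1 * d2 < 0 and d3 * d4 < 0


def tripline_crossing(a: Point, b: Point,
                      prev_pos: Point, curr_pos: Point) -> Optional[str]:
    """Return "forward" or "reverse" if the object crossed the tripline, else None.

    "forward" is defined here (by assumption) as moving from the positive
    side of a->b to the negative side; "reverse" is the opposite.
    """
    if not segments_intersect(a, b, prev_pos, curr_pos):
        return None
    return "forward" if side_of_line(a, b, prev_pos) > 0 else "reverse"


# Usage: a vertical tripline from (0, 0) to (0, 10).
line_start, line_end = (0.0, 0.0), (0.0, 10.0)
print(tripline_crossing(line_start, line_end, (-1.0, 5.0), (1.0, 5.0)))   # forward
print(tripline_crossing(line_start, line_end, (1.0, 5.0), (-1.0, 5.0)))   # reverse
print(tripline_crossing(line_start, line_end, (-3.0, 5.0), (-1.0, 5.0)))  # None
```

An alarm rule that cares about only one direction, such as wrong-way entry through an exit, would simply trigger on one of the two labels and ignore the other.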

It is no longer necessary to specify specialized or expensive cameras to get

these capabilities. They are increasingly being included as standard features of

the new smarter, higher-resolution network cameras. It is also not difficult for

integrators of IP-based systems to implement these capabilities in existing or

new systems. The technologies are easy to program, robust and proven, and do not add

appreciably to system costs. Adding video analytics at the camera level also

does not require additional server capacity.

Another system advantage of leveraging in-camera video analytics is the

ability to filter when video signals actually transmit over a network. Too much

video on a network, especially if the network is being shared by other

enterprise uses, can be a bandwidth and storage challenge. This is especially

true given the migration to megapixel video cameras and larger image sizes. Use

of an in-camera analytics alarm to trigger when video needs to go over the

network, or what video is recorded, can provide an attractive option to better

manage network resources.
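The idea of letting an analytics alarm decide which video crosses the network can be sketched as follows. This is an illustrative model only: it assumes the camera holds a small buffer of recent frames, sends those buffered "pre-roll" frames when an alarm fires, and keeps sending for a few "post-roll" frames afterward. The function name and the `pre_roll` and `post_roll` parameters are assumptions, not settings from any particular camera.

```python
from collections import deque
from typing import Deque, Iterable, List, Tuple


def select_frames_to_send(frames: Iterable[Tuple[int, bool]],
                          pre_roll: int = 2, post_roll: int = 2) -> List[int]:
    """Given (frame_id, alarm_active) pairs, return the frame ids worth transmitting."""
    buffer: Deque[int] = deque(maxlen=pre_roll)  # most recent quiet frames
    sent: List[int] = []
    remaining_post = 0
    for frame_id, alarm in frames:
        if alarm:
            sent.extend(buffer)      # flush the context leading up to the event
            buffer.clear()
            sent.append(frame_id)
            remaining_post = post_roll
        elif remaining_post > 0:
            sent.append(frame_id)    # keep sending briefly after the event
            remaining_post -= 1
        else:
            buffer.append(frame_id)  # quiet frame: hold locally, do not send
    return sent


# Usage: ten frames, with the analytics alarm active on frames 4 and 5.
stream = [(i, i in (4, 5)) for i in range(10)]
print(select_frames_to_send(stream))  # [2, 3, 4, 5, 6, 7]
```

In this toy run, six of the ten frames are transmitted. On a scene that is quiet most of the day the proportion sent would be far smaller, which is where the bandwidth and storage savings come from.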

Analyzing video at the network edge also ensures that all the information in

the video image is available to be analyzed, which is not the case, for example,

with compressed video traveling across a network. Use of video analytics at the

camera level is also ultimately scalable. You only have to use as much as you

need; for instance, you could start small and add additional smart cameras

specifically where you need them over time.

Centralized video analytics

As useful as in-camera analytics can be, it may not be the best approach for

every application. By their nature, the analytics inside cameras are limited in

terms of programmability and customization. Not every video analytics

application can be reduced to whether a line is crossed or whether an object is

moved. For more complex or application-specific needs, there are a variety of

third-party video analytics systems that are typically integrated as part of a

VMS system.

These analytics systems are more flexible and can be programmed for a wider

variety of uses and application environments. They can detect a range of

security threats in multiple locations, even if the threats are different at

each site. They can also be used with more complex scenarios, for example, to

incorporate a greater number of user-specified parameters when deciding whether

to issue an alarm. And as good as the in-camera chips are, software-based

systems used with a server have much more processing power. Greater processing

expands the capabilities of these systems, although the greater functionality

has a price, both a software cost and a need for additional hardware. In terms

of upgrading the system, it is much easier to upgrade a centralized

software-based system than to manage the upgrade of possibly hundreds of cameras

located at the network edge. Maintenance and system management also tend to be

simpler with a centralized approach.

Deploying analytics across multiple cameras is also a benefit of

centralized analytics. For instance, a centralized analytics system could check

for similar or related activities across a group of cameras to analyze

system-wide patterns that might prompt an alarm. Software to read license plate

numbers is mature and effective, and higher-resolution video images enable the

software to do an even better job. Another advantage of a centralized system is

the availability of a database to capture and compare multiple face images or

license numbers simultaneously in high traffic applications like toll plazas or

stadiums.

Important variables in server-based systems include compatibility with

cameras and other system components and the importance of image quality.

Higher-resolution video cameras provide better images and more information for

analytics systems. Systems receiving higher quality data are consequently more

effective.

Server-based systems have also matured and expanded their functionality.

False alarms, a problem with earlier systems, can be better managed using

analytics. Many of the systems are user-friendly and intuitive to operate. There

is also now a large installed base of video analytics systems, so users have an

opportunity to hear from their peers how these systems have improved since the

early trials and tribulations.

Cameras never blink

It has been decades since Sandia National Laboratories tested the

attentiveness of individuals tasked with watching video monitors for several

hours a day. Results of the Sandia test for the U.S. Department of Energy

estimated the attention span of anyone watching an inactive video screen to be

about 20 minutes. That is a scary number considering how many video screens

there are that are presumably being watched.

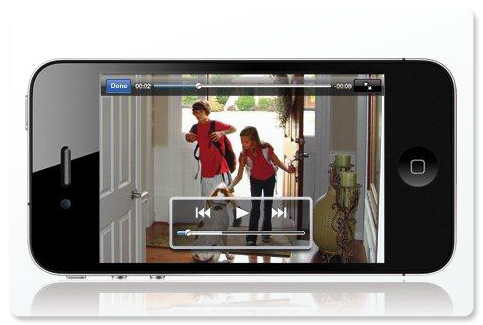

Video analytics functionality now provides an answer to the attention-span

dilemma. It can effectively analyze video data and alert an operator only when

there is something of interest to see. Technology will never replace the need

for human interaction and response, but video analytics has developed into a

useful tool to make security personnel more effective overall.
