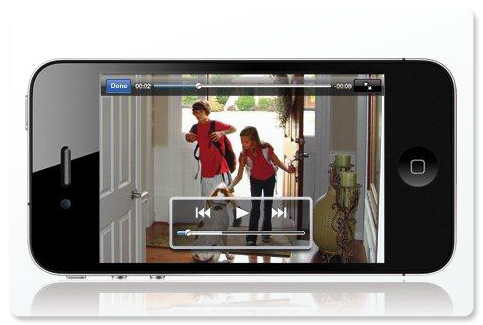

Security and Surveillance Cameras

Security and Surveillance Cameras are Uniquely Positioned to be Enhanced with More Intelligence

Capturing depth in addition to the usual two dimensions is already a must-have feature for systems in which depth-sensing information, combined with computing power, enables functionality such as face-based unlocking. Additional AI can distinguish between people, animals, and vehicles, and can recognize familiar faces.

Depth Carries Critical Information

3D imaging is inspired by the most complex imaging device: the human eye. We have natural depth perception capabilities that help us navigate our world.

Many of today's devices translate the 3D world into a 2D image and rely on 2D image recognition-based computer vision. The limitation of 2D technology is that a flat image does not record how far apart the objects in a given scene are.
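To make this limitation concrete, here is a minimal sketch that assumes an idealized pinhole camera. The focal length of 800 pixels and the object sizes and distances are hypothetical values chosen for illustration, not figures from any real camera. Under this model an object of height H at distance Z projects to roughly f * H / Z pixels, so a small object close to the lens and a large object far away can occupy the same number of pixels.

```python
def projected_height_px(focal_length_px: float, height_m: float, distance_m: float) -> float:
    """Apparent height in pixels of an object under an ideal pinhole model."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return focal_length_px * height_m / distance_m


# Hypothetical focal length, expressed in pixels (an assumption for this sketch).
FOCAL_LENGTH_PX = 800.0

# A 1.75 m person standing 7 m away ...
person_px = projected_height_px(FOCAL_LENGTH_PX, height_m=1.75, distance_m=7.0)
# ... and a 0.25 m object held 1 m from the lens.
small_object_px = projected_height_px(FOCAL_LENGTH_PX, height_m=0.25, distance_m=1.0)

print(person_px, small_object_px)
```

With these assumed values both objects come out 200 pixels tall, so the flat image alone cannot tell them apart by size.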

Many cameras use a passive infrared (PIR) motion sensor to wake the camera or to alert on activity. The PIR sensor only senses thermal motion changes in the scene. Changing the sensitivity setting only changes how large a thermal change must be to trigger an alarm, not how near the object must be to the camera. As a result, a truck on the street or a pet near the camera can cause false alarms. Too often, to remove these false alarms, we end up turning the sensitivity down to the point where the system no longer detects human presence.
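The trade-off can be sketched with a toy threshold model. Everything here is an assumption made for illustration: the `Event` type, the `pir_alarms` helper, and the thermal-change readings (in arbitrary units) are invented and do not come from any real PIR sensor. The point is only that the decision uses the size of the thermal change and never the distance.

```python
from dataclasses import dataclass


@dataclass
class Event:
    label: str
    thermal_change: float  # arbitrary units, invented for this sketch
    distance_m: float      # known to us, but invisible to the PIR sensor


def pir_alarms(events, threshold):
    """Return the labels that trigger an alarm; distance is never consulted."""
    return [e.label for e in events if e.thermal_change >= threshold]


# Hypothetical readings: the person is the only event we actually want.
events = [
    Event("person", thermal_change=4.0, distance_m=5.0),
    Event("truck", thermal_change=9.0, distance_m=20.0),
    Event("pet", thermal_change=6.0, distance_m=1.0),
]

sensitive = pir_alarms(events, threshold=3.0)    # person, truck and pet all alarm
desensitized = pir_alarms(events, threshold=9.5) # nothing alarms, person included

print(sensitive, desensitized)
```

In this toy setup, any threshold high enough to silence the truck and the pet also silences the person, which is the failure mode described above.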

Computer vision motion detection allows for greater analysis of the scene and for identification of the subject through advanced features such as person detection or facial recognition. This requires the full camera to run and process the scene to determine whether objects of interest are present. Each camera then uses a non-trivial amount of processing and can be fooled by pictures of human subjects. In addition, the reliability of these features depends heavily on the specifics of the algorithms used.

Using 3D imaging systems, one can add distance or depth information to each pixel of the RGB image and accurately measure the distances between objects in a complete scene in real time. When a device reads images in 3D, it knows not only the colour and shape information from the flat image but also position and size information.
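As a rough illustration of how per-pixel depth turns into measurable distances, the sketch below back-projects two pixels into 3D using the standard pinhole camera model and then measures the distance between them. The intrinsics (`fx`, `fy`, `cx`, `cy`), the pixel coordinates, and the depth values are hypothetical, and depth is assumed to be measured along the optical axis. Real depth cameras supply their own calibration.

```python
import math
from typing import NamedTuple, Tuple


class Intrinsics(NamedTuple):
    fx: float  # focal length in pixels, x axis
    fy: float  # focal length in pixels, y axis
    cx: float  # principal point, x
    cy: float  # principal point, y


Point3D = Tuple[float, float, float]


def back_project(u: float, v: float, depth_m: float, k: Intrinsics) -> Point3D:
    """Convert pixel (u, v) plus its depth into a 3D point in camera coordinates."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def distance_between(p: Point3D, q: Point3D) -> float:
    """Euclidean distance in metres between two 3D points."""
    return math.dist(p, q)


# Hypothetical intrinsics for a 640x480 sensor (an assumption for this sketch).
CAMERA = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)

near_point = back_project(320, 240, depth_m=2.0, k=CAMERA)
far_point = back_project(620, 240, depth_m=4.0, k=CAMERA)
separation_m = distance_between(near_point, far_point)

print(near_point, far_point, round(separation_m, 2))
```

In the flat image the two pixels are simply 300 pixels apart. With depth attached, the same pair resolves to a physical separation in metres, which is the kind of information a device can use to judge position and size.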
